If a student were to ask you what the most important piece of technology ever invented was, what would you say? Hopefully you’d use it as a conversation starter, and see what they knew. Things that would likely come up would be fire, the wheel, agriculture, and plumbing. All of these could vie for the top spot. But one of the most powerful technologies ever invented isn’t even taught as a technology, or even as an invention. Is it because we take it for granted, or because it was invented by non-European cultures?

Before Europe had the numbers we have today, they had Roman numerals. The Romans were great at lots of things, but their arithmetic, bluntly, sucked. Quick, what’s XIX times LVIII? Clearly they didn’t do math using these clunky numbers; doubtless merchants had abaci that allowed faster calculations, but there was no way to record the calculations. The Greeks, so famous for their math, didn’t have a better numeral system. In any case, Greek mathematicians focused on geometric proofs, and considered arithmetic a trivial matter for members of the lowly merchant class.
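To get a feel for how awkward that question is, here is a minimal Python sketch that answers it the only sensible way: convert to place-value numbers, multiply, and convert back. The function names are my own, and it assumes the standard subtractive spelling (IX for 9), which the Romans themselves didn't always follow.

```python
# Values of the Roman symbols (standard subtractive notation assumed).
ROMAN_VALUES = {"I": 1, "V": 5, "X": 10, "L": 50, "C": 100, "D": 500, "M": 1000}

# Symbol groups from largest to smallest, used when writing a numeral.
ROMAN_PAIRS = [
    (1000, "M"), (900, "CM"), (500, "D"), (400, "CD"),
    (100, "C"), (90, "XC"), (50, "L"), (40, "XL"),
    (10, "X"), (9, "IX"), (5, "V"), (4, "IV"), (1, "I"),
]


def roman_to_int(numeral: str) -> int:
    """Convert a Roman numeral such as 'XIX' to an integer."""
    total = 0
    for i, symbol in enumerate(numeral):
        value = ROMAN_VALUES[symbol]
        # A smaller symbol written before a larger one is subtracted (IX = 9).
        if i + 1 < len(numeral) and value < ROMAN_VALUES[numeral[i + 1]]:
            total -= value
        else:
            total += value
    return total


def int_to_roman(number: int) -> str:
    """Convert a positive integer to a Roman numeral."""
    parts = []
    for value, symbols in ROMAN_PAIRS:
        count, number = divmod(number, value)
        parts.append(symbols * count)
    return "".join(parts)


product = roman_to_int("XIX") * roman_to_int("LVIII")
print(product, int_to_roman(product))  # 1102 MCII
```

Notice that the multiplication itself happens entirely in place-value form; the Roman numerals are only used for reading and writing the answer.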

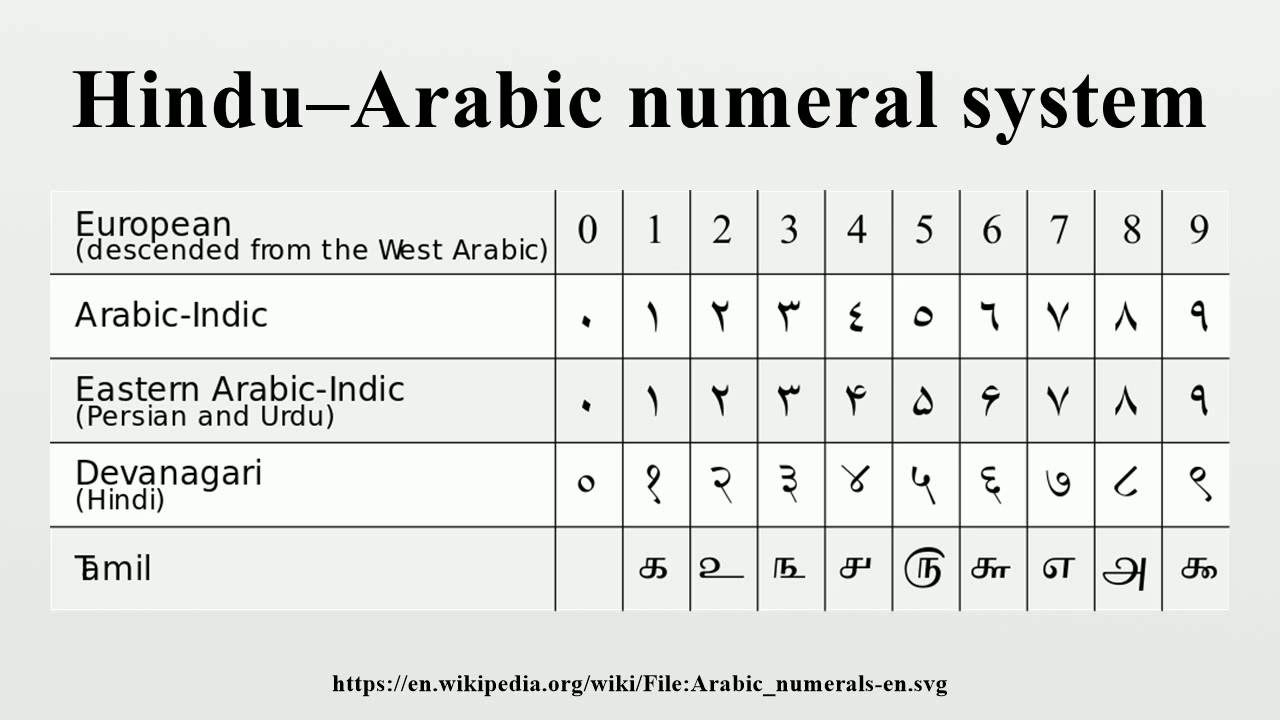

At least as far back as 700 BCE Hindu mathematicians had to record what would have been stupendously large numbers for their cosmology; for example their estimate of the life of the universe is 4.3 billion years, which given what we know today is a pretty good guess. They needed a way to represent these numbers. Originally they gave different numbers names, like “veda” (4) and “tooth” (32). But they found a more efficient way using calculations on clay tablets covered with sand, where they traced different numbers and calculations. Words were dispensed with and replaced with symbols. We wouldn’t recognize the symbols now, but they were the ancestors of the numbers we use today, and they used a place value system based on powers of 10. For an empty place they used a dot. A case has been made that they copied this system from a similar system used in China in the first millennium BCE.

The Hindus certainly weren’t the only people who used a place value system. The Maya had a place value system maybe as early as the first century CE. And the Sumerian cultures had nearly every part of a place value system as far back as the second millennium BCE.

It’s interesting to think about why we don’t use these systems now. I am a huge fan of the Mayans and love to talk about their math. But their numeral system had a couple of weaknesses. They used powers of 20 instead of 10, which would have made it difficult to create things like multiplication tables. Worse, instead of making their third-lowest place 400 (20×20) they made it 360 (20×18) because that was close to the length of a year and they were mostly interested in keeping track of dates. Their numeral system could easily have overcome these weaknesses, but the Classic Mayan culture crashed in the late first millennium CE and descending civilizations were decimated by European invaders.
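Here is a rough Python sketch of what that odd third place does to the arithmetic. It assumes, as I understand it, that the places run 1, 20, 360 and then keep multiplying by 20 after that; the function names are just mine for illustration.

```python
def maya_place_values(places: int) -> list[int]:
    """Place values from lowest to highest: 1, 20, 360, 7200, ..."""
    values = [1, 20]
    while len(values) < places:
        # Only the third place breaks the pattern: 18 x 20 instead of 20 x 20.
        factor = 18 if len(values) == 2 else 20
        values.append(values[-1] * factor)
    return values[:places]


def maya_to_int(digits: list[int]) -> int:
    """Convert digits written highest place first into an ordinary integer."""
    values = maya_place_values(len(digits))
    return sum(d * v for d, v in zip(reversed(digits), values))


print(maya_place_values(5))       # [1, 20, 360, 7200, 144000]
print(maya_to_int([1, 0, 0]))     # 360, not 400
print(maya_to_int([1, 0, 0, 0]))  # 7200
```

Because one place is worth 18 of the place below it while all the others are worth 20, you can't shift a number over one place to multiply by the base the way we do with 10.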

The Sumerians had a better chance to make a numeral system that lasted, but their system lacked some important parts. Unlike both the Mayans and Indians they didn’t have a symbol for an empty place, so they just left it blank. If we did things like the Sumerians then 102 would be written as “1 2”. They also didn’t have a way to mark a unit place, even though they could do fractional places. The order of magnitude had to be figured out by context. If we did things this way then “432” could mean 0.432, 43.2, or 432,000. (In fact we do this verbally today: if I say a house costs “two-fifty” you assume one thing, if I say a slice of pizza costs “two-fifty” you assume something else.) Worse, they used base 60, meaning that a multiplication table would be impossible to memorize by anyone but a genius. Still, the Sumerian base-60 place value system continues to be used today in the way we count time, angles and latitude and longitude.

Still, the Hindu numeral system might not be the default today if not for the Persian genius Muhammad ibn Musa al-Khwarizmi. You might have heard of him as the inventor of algebra, and that is more or less true (really he got a lot of it from the Hindus). But that completely understates his impact. After his famous book al-Kitāb al-mukhtaṣar fī ḥisāb al-jabr wal-muqābala (The Compendious Book on Calculation by Completion and Balancing, from which the term “algebra” comes), he wrote a couple of less famous but maybe even more influential volumes whose original titles are unknown but which were translated as “So Said Al-Khwarizmi” and “Al-Khwarizmi on the Hindu Art of Reckoning.” These translations were the Europeans’ introduction to the algorithms of arithmetic whose descendants we still use today: long addition, long multiplication, long division and so on.

So rather than just saying al-Khwarizmi gave us algebra, it’s better to say that aK gave us nearly the entirety of what a layperson calls “math.” Is this a technology? Absolutely. There is nothing in the counting numbers that requires they be divided into powers of 10, as the Mayans and Babylonians could tell you. Arguably other place value systems might work even better: base 6 would have easier times tables, base 12 would give us better fractional places and base 16 has the advantage of working with the binary numbers central to computing.
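To see that the base really is a design choice, here is a small Python sketch that writes the same number in several bases. The `to_base` function is my own illustration, not anything standard; it returns a list of digits so that bases above 10 don't need extra symbols.

```python
def to_base(number: int, base: int) -> list[int]:
    """Return the digits of a non-negative integer in the given base, highest place first."""
    if number == 0:
        return [0]
    digits = []
    while number > 0:
        # The remainder is the digit for the current (lowest remaining) place.
        number, remainder = divmod(number, base)
        digits.append(remainder)
    return digits[::-1]


for base in (6, 10, 12, 16, 20, 60):
    print(base, to_base(432, base))
```

In base 6 the number 432 comes out as 2, 0, 0, 0 and in base 12 as 3, 0, 0, while in base 60 it is just 7, 12. The quantity is the same every time; only the bookkeeping changes.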

But it’s because of this piece of technology that an average contemporary sixth grader can solve problems that would have only been accessible to the most advanced calculator in Roman times. It is because of this technology, of course, along with aK’s invention of algebra, that we have made so many other tools in math like the coordinate plane and calculus, and all of the practical inventions that have required complex calculations.

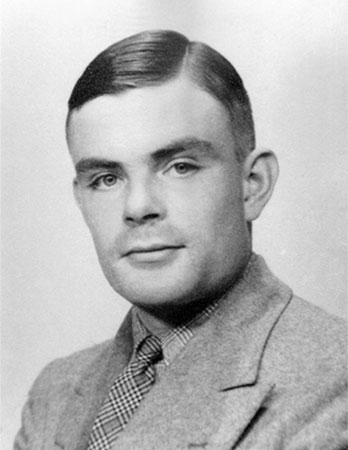

But the contribution doesn’t end there! In the early 20th century logician Kurt Gödel ruined the dreams of a generation of mathematicians by showing that in every logical mathematical system some true theorems couldn’t be proved. The British mathematician Alan Turing wondered if he could at least make a method to predict what those unprovable theorems could be. If this were possible perhaps we could place some logical land mine warnings around them. (Spoiler: you can’t do that either.)

This led Turing to ask what sort of things could be proven using a “mechanical process,” i.e. an algorithm. To think about this, he began by considering someone carrying out an algorithm in the style invented by al-Khwarizmi, like

23

x 15

He decided there was no reason this had to happen in two dimensions, so imagined instead a tape containing

23 x 15 =

from which a machine could carry out the algorithm by moving up and down the tape. This machine, known today as a “Turing machine,” is not a physical thing but rather a theoretical instrument that has the ability to calculate anything that’s capable of being computed mechanically.
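To make “carrying out the algorithm” concrete, here is the schoolbook long multiplication written as a Python sketch. To be clear, this is not Turing’s machine, just the paper-and-pencil method spelled out one digit at a time; the function name and the choice to pass numbers as strings of digits are my own.

```python
def long_multiply(a: str, b: str) -> str:
    """Multiply two non-negative decimal numbers given as digit strings, as on paper."""
    # One slot per place of the answer, lowest place first.
    result = [0] * (len(a) + len(b))
    for i, digit_b in enumerate(reversed(b)):
        carry = 0
        for j, digit_a in enumerate(reversed(a)):
            # Multiply one pair of digits, add what is already in that place.
            total = result[i + j] + int(digit_a) * int(digit_b) + carry
            carry, result[i + j] = divmod(total, 10)
        # Whatever is left over goes in the next place up.
        result[i + len(a)] += carry
    text = "".join(str(d) for d in reversed(result)).lstrip("0")
    return text or "0"


print(long_multiply("23", "15"))  # 345
```

Every step only looks at one or two digits and a carry, which is exactly the kind of small, mechanical move a machine reading a tape could make.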

“Computed mechanically” today of course means “can be done by a computer.” Turing didn’t invent the computer; rather, he invented computation as we now understand it. But his method of computing began with the methods written by a Persian mathematician who’d studied the Hindus who maybe studied the Chinese.

We can’t say al-Khwarizmi invented our modern numbers and mathematical algorithms; rather he is similar to Euclid, who compiled different geometrical proofs (no doubt filling in blank spaces with his own genius) from Greek mathematicians into a compendious volume that shaped math for millennia to come. Certainly Euclid’s Elements and al-Khwarizmi’s “Compendious Book” are the most influential math textbooks of all time.

It’s surprising, if you think about it, that outside of specialized areas there is only a single numeral system that’s used in general today worldwide, regardless of culture or language spoken. Different countries might use different symbols for “2” and “4” but their calculations are all identical if you just change the symbols. This certainly suggests the power of this technology. And all of our science and invention, from molecular biology to Mars explorers, could not have been made if we couldn’t do the kind of calculations we can do.

But when students learn arithmetic, they are given this technology as if it has just been written into the universe with no invention necessary at all. I remember listening in shock when a commenter on a news channel said something like “give me an important invention that didn’t come from a European.” He’d never been taught that an invention we use today was created by Persians, Hindus and Chinese, because he’d never been taught it was an invention at all. We can do better than that.

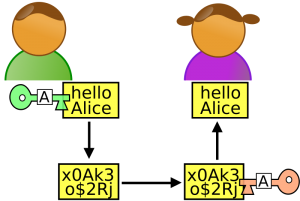

Recently I’ve been having students play around with some “toy” encryption programs, mostly simple double-Caesar encryptions, which is a good way to learn about text in Java. As with everything I started thinking and reading about encryption, and came to a terrifying realization: encryption for regular people is doomed.
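For anyone who hasn’t seen one, here is a rough sketch of the kind of toy cipher I mean. My students work in Java; I’ve written this one in Python to keep it short. I’m assuming “double-Caesar” means alternating between two shift amounts from one letter to the next, and the function name is just for illustration.

```python
import string

ALPHABET = string.ascii_uppercase


def double_caesar(text: str, shift_a: int, shift_b: int) -> str:
    """Shift letters by shift_a and shift_b alternately; leave other characters alone."""
    shifts = (shift_a, shift_b)
    output = []
    letters_seen = 0
    for char in text.upper():
        if char in ALPHABET:
            shift = shifts[letters_seen % 2]
            # Wrap around the end of the alphabet with modulo 26.
            output.append(ALPHABET[(ALPHABET.index(char) + shift) % 26])
            letters_seen += 1
        else:
            output.append(char)
    return "".join(output)


secret = double_caesar("HELLO WORLD", 3, 5)
print(secret)                         # KJOQR BRWOI
print(double_caesar(secret, -3, -5))  # HELLO WORLD
```

Decrypting is just encrypting with the negative shifts, which is also why this is strictly a toy: anyone who guesses the two numbers can read everything.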

much anyone, even the US government. It was based on a relatively simple mathematical principle: if you have the product of two very big prime numbers (where ‘very big’ is at least 40 decimal digits, though for modern implementations more likely 150 or 300 digits) it’s almost impossible to find those factors. Specifically, for a regular fast processor it would take millions of years of trials; a huge multi-core supercomputer might get it done in your lifetime but it would take years and the computer couldn’t be doing anything else.
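Here is a tiny Python sketch of that lopsidedness, using plain trial division. It is only meant to show the shape of the problem: the primes are very small by cryptographic standards, real attacks use much cleverer methods than this, and the function name is my own.

```python
def smallest_factor(n: int) -> int:
    """Return the smallest prime factor of n (n >= 2) by trial division."""
    if n % 2 == 0:
        return 2
    candidate = 3
    # Only need to test up to the square root of n.
    while candidate * candidate <= n:
        if n % candidate == 0:
            return candidate
        candidate += 2
    return n  # no divisor found, so n itself is prime


p, q = 7919, 104729          # two small primes, nothing like 150 digits
n = p * q                    # going forwards is a single multiplication
found = smallest_factor(n)   # going backwards means searching
print(n, "=", found, "x", n // found)
```

The multiplication is one step, but the search has to try thousands of candidates even for these small primes, and each extra digit in the primes multiplies the work for this kind of search by about ten.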

leverly published the code as a hardback book, which was indisputably protected by the First Amendment. Later court cases over similar encryption methods established the principle that code is protected by the First as well.

talented coder, and soon was. Many other encryption algorithms have since been invented using some variation of Zimmermann’s prime number hack. PGP is still around as well; most recently Ed Snowden used a more advanced version of PGP to share his whistleblowing documents with Glenn Greenwald and Laura Poitras, as documented in Poitras’ intense documentary Citizenfour.

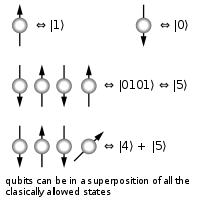

Lots of progress has been made in quantum computers, but as far as I know, no one has made a quantum computer that can execute Shor’s algorithm in that way. But you can be certain that the NSA and many other intelligence agencies are pouring an enormous amount of resources into solving this problem. In fact, if you were paying attention to the dates you might have noticed that Shor invented his algorithm within about a year of when the government opened its criminal investigation of Zimmermann for publishing PGP, so Shor certainly picked factoring large numbers as a challenging problem for a good reason.

In the early 90s, encryption was for spies and hackers; now it’s essential to every person who uses the net. In fact, Google Chrome will soon warn users that sites using plain http are insecure.

How often has this happened: you click on something you didn’t actually want to see (maybe because you were trying to scroll with your finger), causing an image to fill the screen obscuring whatever you were actually trying to read. Instinctively you hit the ‘back’ button to get back to whatever you were trying to look at.


tc.), but realized that those aren’t the most important things.

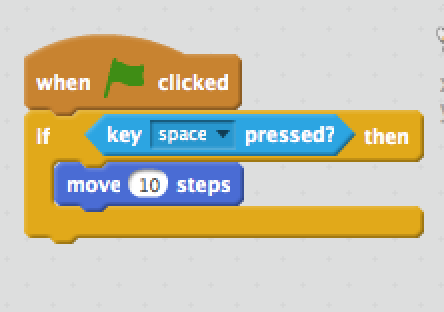

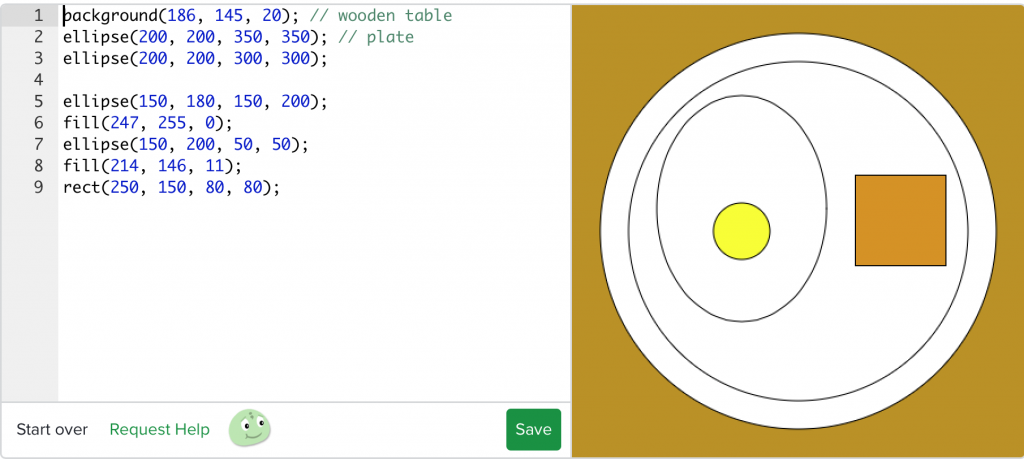

One of the things I highly recommend for this is the Khan Academy tech classes. It’s easy for the kids to log in with their Google Drive accounts and for you to create a class where you can monitor them. The classes are very self-directed, with videos accompanying an interactive programming window, so if students have headphones they can all proceed at a pace that works for them. As a teacher I’m sure you know that with any subject some students will take to it like a fish to water and others will have a great deal of difficulty. This is doubly true for programming.

This is part of a series on what is the best first ‘pro’ language, meaning something that’s actually used for professional applications.

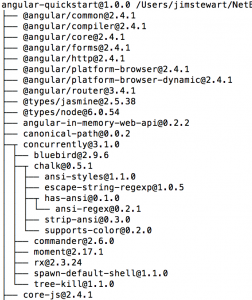

Every few months, it seems, everyone is excited about a new front-end “framework.” Frameworks are downloadable modules of JavaScript and CSS that come up with some (supposedly) better way of handling things in the DOM of various browsers. A few years ago everyone was using jQuery, which maybe isn’t exactly a framework but was an easy way to do a lot of visual things like dropdown menus and, more importantly, to get fresh information from the server using AJAX without loading a whole new page. But Google had developed their own way to do that using a framework called Angular, which is based on the Model View Controller web paradigm. At the same time Facebook developed a framework called React that wasn’t made for client-server interactions but which many people consider better than Angular for dealing with events in the user interface. Now Google has made Angular 2, which is very different from Angular.

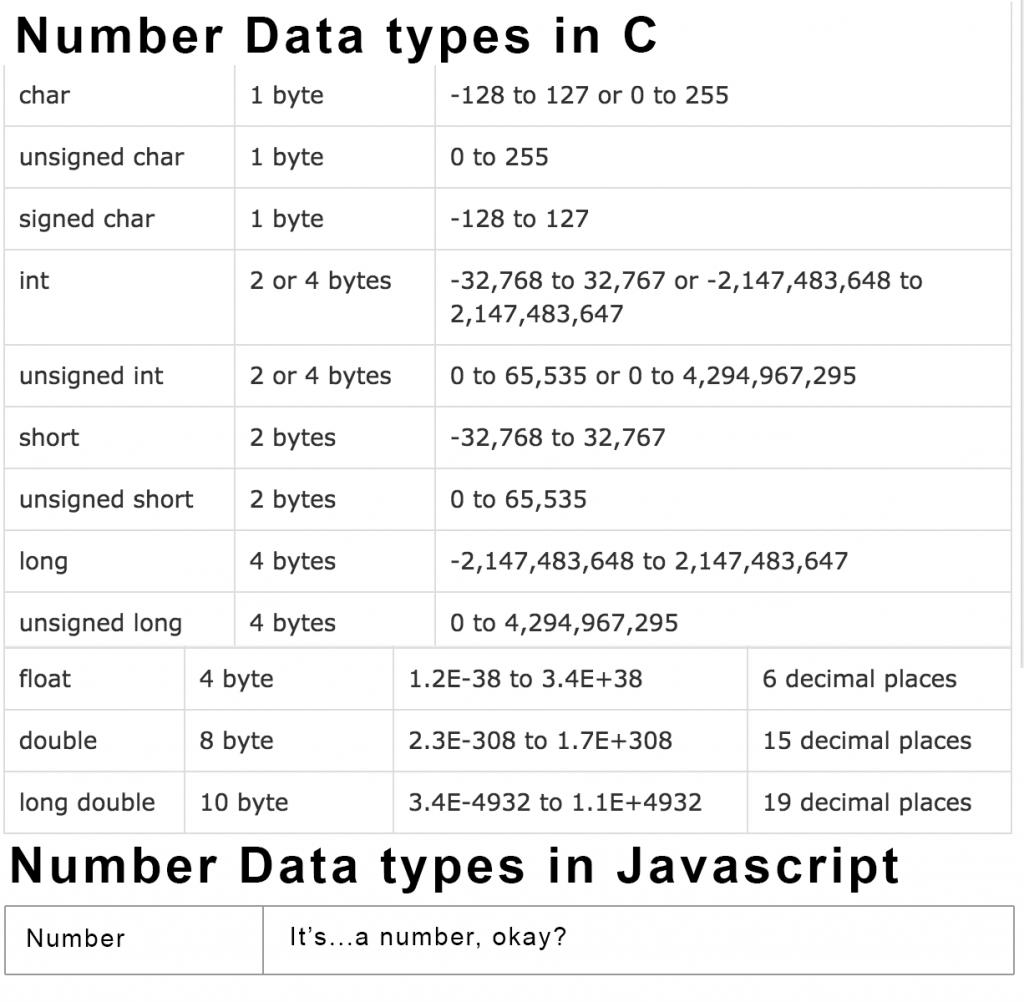

If you say x = 10 in JavaScript then x’s datatype is…number. As far as JS is concerned x could become 87 billion or 0.0003. But as far as JS is concerned x could also become the lyrics of the national anthem or a graphical DOM element. This is very, very far from the way a computer’s memory works, so when a student moves to a language that’s even implicitly typed like Python, let alone statically typed like Java, they are going to be in for a terrible shock.
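Here is a small Python sketch of the kind of shock I mean. Python will also let a name point at a number one moment and a string the next, but it keeps track of the type behind the scenes and refuses to mix them, where JavaScript would quietly turn "5" + 10 into "510". The `add_ten` function is just a made-up example.

```python
x = 10
print(type(x).__name__)   # int

x = "O say can you see"   # the same name can be rebound to a string
print(type(x).__name__)   # str


def add_ten(value):
    """Try to add 10 to whatever is passed in and report what happens."""
    try:
        return value + 10
    except TypeError as error:
        # Python will not silently convert between strings and numbers.
        return f"refused: {error}"


print(add_ten(5))    # 15
print(add_ten("5"))  # a 'refused: ...' message instead of "510"
```

A student coming from JavaScript tends to read that second result as the language being broken, when really it is the first time the language has told them the truth about what is stored in the variable.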


HELLO , so I studiously mimicked my co-teacher’s lessons, and soon learned a lot.
